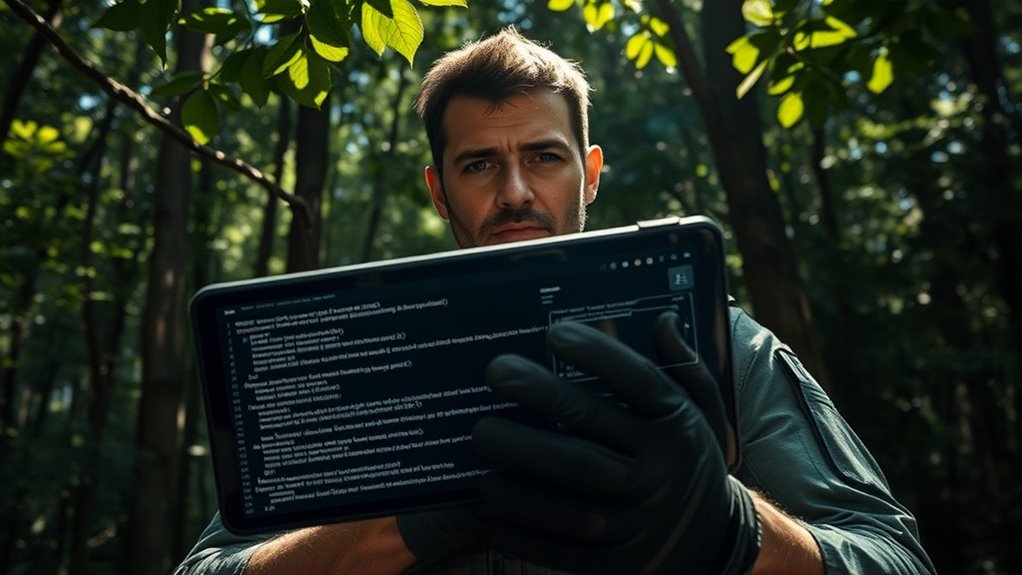

Prompt injection is a security risk where attackers sneak deceptive commands into normal conversations with AI. They craft these prompts to bypass filters, potentially exposing sensitive info or forcing the AI to act against safety rules. It’s like hiding malicious instructions in innocent questions. Spotting these tricks requires attention to unusual phrasing or code snippets. Keep learning how these covert attacks work, and you’ll better understand how to defend your systems against them.

Key Takeaways

- Prompt injection is a security flaw where malicious inputs trick AI into revealing sensitive info or bypassing safety rules.

- Attackers embed deceptive commands within normal prompts, making malicious instructions appear innocent.

- Recognizing subtle language tricks and suspicious patterns helps identify potential prompt injection attempts.

- Technical defenses include input validation, security updates, and combining safeguards with user awareness training.

- Staying informed about new manipulation techniques ensures proactive protection against evolving prompt injection threats.

Prompt injection is a security vulnerability that allows malicious actors to manipulate an AI’s behavior by inserting deceptive or harmful prompts. Think of it as sneaking a command into a conversation that influences the AI to do something unintended. When you interact with AI systems, especially those that generate responses based on user input, it’s possible for someone to craft a prompt that tricks the AI into revealing sensitive information, bypassing restrictions, or acting in ways you wouldn’t expect. Attackers exploit this by disguising malicious instructions as part of normal user input, making it tricky for the AI to distinguish between genuine queries and manipulative prompts.

Prompt injection tricks AI systems by embedding malicious commands within normal inputs, risking security breaches and unintended behavior.

Here’s how it works in practice. Normally, an AI is designed to follow specific rules and filters to prevent harmful output. But if an attacker understands how the prompt processing works, they can craft a message that “hijacks” the AI’s logic. For example, they might embed a hidden command within a seemingly innocent question, prompting the AI to reveal restricted data or override safety measures. Once the AI processes this malicious prompt, it unwittingly follows the attacker’s instructions, leading to potential data leaks, misinformation, or other security breaches. It’s like slipping a fake order into a conversation with a secure system, causing it to act against its intended safeguards.

Your role as a field officer involves understanding how these prompts can be disguised and how to spot them. Since attackers often use subtle language tricks or embed commands within normal text, training your eyes to spot suspicious patterns is essential. Recognizing when a prompt contains unusual phrasing, contradictory instructions, or embedded code snippets helps you identify potential threats. It’s also important to keep the AI system updated with the latest security patches and to implement strict input validation. Doing so minimizes the chances that malicious prompts can slip through unnoticed and cause damage.

Furthermore, understanding prompt injection helps you defend against it. You should know that no system is entirely foolproof—attackers are always developing new techniques. As a result, combining technical safeguards with thorough user training creates a stronger defense. Always question unusual requests, especially those that seem to push the boundaries of normal interaction. Remember, prompt injection isn’t just about hacking; it’s about manipulating the AI’s understanding to serve malicious goals. Staying vigilant means staying one step ahead, learning to spot and neutralize these deceptive prompts before they cause harm. Your awareness and proactive measures are key to keeping AI systems secure against prompt injection threats.

Additionally, being aware of potential content manipulation techniques can help you better defend the system from such attacks.

Frequently Asked Questions

How Can Organizations Detect Prompt Injection Attacks Early?

To detect prompt injection attacks early, you should monitor your AI systems closely for unusual or unexpected responses. Implement real-time logging and anomaly detection to spot suspicious inputs or outputs. Train your team to recognize signs of manipulation and set strict input validation rules. Regularly update your security protocols and conduct audits to identify vulnerabilities. Staying vigilant helps you catch potential threats before they cause significant harm.

What Are the Legal Implications of Prompt Injection Exploitation?

You could face legal consequences if you exploit prompt injection vulnerabilities, such as lawsuits for unauthorized access or data breaches. Organizations might also be held liable if they fail to safeguard user data or comply with regulations like GDPR or CCPA. Penalties can include hefty fines, legal actions, or damage to reputation. Always guarantee you follow laws and ethical guidelines to avoid legal risks when handling or discovering vulnerabilities.

Can Prompt Injection Be Used Maliciously Outside AI Systems?

Prompt injection can be used maliciously outside AI systems, much like a Trojan horse sneaking into your digital fortress. You could manipulate chatbots, automated email responses, or even voice assistants to reveal sensitive data or perform unintended actions. This threat isn’t limited to AI; cybercriminals can exploit it anywhere that input validation isn’t strict, turning seemingly harmless commands into tools for deception, theft, or disruption. Stay vigilant and secure your systems accordingly.

How Does Prompt Injection Differ From Traditional Cyberattacks?

Prompt injection differs from traditional cyberattacks because it specifically targets AI systems by manipulating input prompts to produce unintended responses. Unlike hacking or malware, it exploits vulnerabilities in the AI’s understanding, often bypassing security measures through clever prompts. You need to be cautious, as prompt injection can subtly alter AI behavior, making it more insidious and harder to detect than conventional cyber threats.

What Training Is Recommended for Field Officers to Identify Prompt Injection?

You should focus on training that sharpens your skills in spotting suspicious prompts and understanding how attackers manipulate inputs. Practice analyzing chatbot interactions and recognizing unusual patterns or language that don’t fit the context. Attend workshops on cybersecurity threats and stay updated on the latest attack techniques. Regular drills and simulations will help you respond quickly. Remember, staying vigilant and questioning odd prompts keeps your systems secure and your team prepared.

Conclusion

Just like a soldier stays alert on the front lines to prevent surprises, you must remain cautious of prompt injections. They can be subtle, sneaking past defenses and changing the outcome. While technology advances, so do the tactics of those trying to manipulate it. Staying vigilant isn’t just about protecting data—it’s about safeguarding trust. In this battle of minds and machines, your awareness is the strongest shield against unseen threats.