Algorithms can exhibit bias, producing unfair outcomes with serious societal consequences. This bias often stems from flawed data, developer prejudices, or incomplete measurements. It can creep in at any stage of AI development and cause harm in critical areas like healthcare and law enforcement. Being aware of these pitfalls is crucial for ethical AI use. Read on to learn more about mitigating these biases and promoting fairness in AI systems.

Key Takeaways

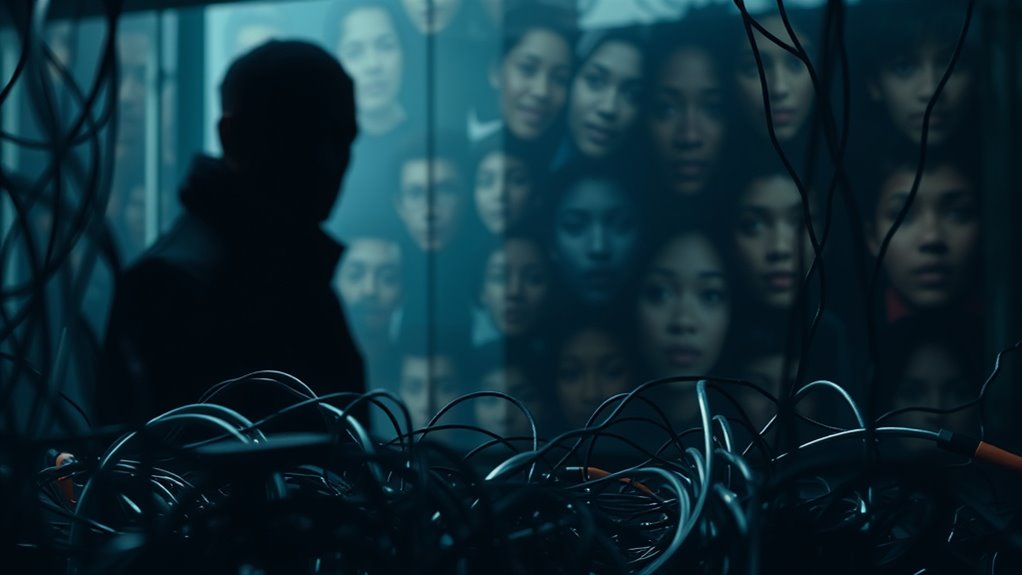

- AI spy bias, meaning bias in AI-powered surveillance, can lead to discriminatory outcomes and reinforce social inequalities through biased algorithmic decisions.

- Algorithms may exhibit sample bias when they are trained on unrepresentative data that fails to capture diverse populations.

- Human cognitive biases during data labeling can introduce prejudice bias, affecting the fairness of surveillance algorithms.

- Continuous monitoring and bias audits are essential to identify and mitigate biases that persist in AI surveillance systems.

- Ethical deployment of AI in surveillance requires transparency to build consumer trust and reduce legal risks associated with biased outcomes.

Understanding AI Bias in Algorithms

As you delve into the realm of artificial intelligence, it's crucial to grasp what AI bias means and how it can affect algorithmic decision-making.

AI bias refers to systematic errors in algorithms that lead to unfair or discriminatory outcomes, often mirroring societal biases. When algorithms are biased, they can result in harmful decisions, especially in critical sectors like healthcare and law enforcement. Additionally, biased algorithms may create feedback loops, reinforcing existing disparities over time. Ignoring AI bias can also expose you to legal and financial risks, particularly with regulations like the EU AI Act. Biases in training data can lead to unfair outcomes, underscoring the need for vigilance in data selection.


To combat this, it's vital to implement transparency, use diverse datasets, and ensure human oversight in decision-making processes.

The Stages Where Bias Emerges

Bias in AI can emerge at various stages of its development, affecting the fairness and effectiveness of the technology.

It often starts during data collection and curation, where unrepresentative datasets can reflect existing social biases. Preexisting bias in data can lead to morally unacceptable assumptions and complicate bias identification.

In the design phase, technical constraints and human cognitive biases in data labeling can further skew results.

During model training, complex nonlinear models can latch onto subtle correlations in the data, such as features that act as proxies for race or gender, creating biases that are difficult to detect.

Once the AI is deployed, automation bias can set in, as users may trust its outputs without question.

Finally, if biases aren't addressed in post-deployment reviews, they can persist and even amplify through feedback loops.

Regular audits and ethical oversight are crucial to identify and correct these biases.
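To see how a feedback loop can amplify a small initial skew, here is a deliberately simplified simulation loosely inspired by predictive policing. Everything in it is a hypothetical assumption for illustration: the function name `simulate_feedback_loop`, the rule that attention always goes to whichever district has the most recorded incidents, and the numbers. Both districts have the same true incident rate, but only the district that receives attention generates new records.

```python
def simulate_feedback_loop(recorded, true_rate, rounds):
    """Toy model of a runaway feedback loop (hypothetical, for illustration only).

    recorded:  initial count of recorded incidents per district
    true_rate: incidents actually observed per round when a district is patrolled
    rounds:    number of allocation rounds to simulate
    """
    recorded = list(recorded)  # copy so the caller's list is not mutated
    for _ in range(rounds):
        # The system sends its single patrol to the district that looks worst in the data.
        target = max(range(len(recorded)), key=lambda i: recorded[i])
        # Only the patrolled district produces new records, which feed the next decision.
        recorded[target] += true_rate[target]
    return recorded


if __name__ == "__main__":
    # Equal true rates, but district 0 starts with one extra recorded incident.
    print(simulate_feedback_loop([11, 10], [5, 5], rounds=10))
```

With a one-incident head start, district 0 ends up with 61 recorded incidents versus 10 after ten rounds, even though the underlying rates are identical. The data the system generates ends up confirming its own earlier decisions.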

Types of AI Bias and Their Implications

Understanding the different types of AI bias is crucial because each can distort outcomes in its own way across various applications:

- Algorithmic bias occurs when an algorithm systematically favors specific groups.

- Sample bias stems from training data that does not represent the population the system will serve.

- Prejudice bias arises when stereotypes held by developers or data labelers, or embedded in historical data, are built into the system.

- Measurement bias arises from incomplete or inconsistent data collection, for example when an easy-to-measure proxy stands in for the quantity that actually matters.

- Exclusion bias happens when certain groups or data points are left out.

The implications of these biases are serious: they can lead to discrimination, inaccurate predictions, and a lack of transparency in AI systems, and they can reinforce societal stereotypes and erode trust in technology. Because these consequences are real, addressing AI bias is essential to ensure fairness, accountability, and equitable opportunities for everyone affected by AI decisions.
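Sample bias and exclusion bias can often be screened for before training by comparing each group's share of the dataset with its share of the population the system will serve. The sketch below assumes you have a trustworthy reference distribution (for example, census-style figures); the function names, group labels, shares, and the 2-percentage-point tolerance are hypothetical choices for illustration.

```python
from collections import Counter


def representation_gaps(samples, reference_shares):
    """Dataset share minus reference-population share for each group, rounded to 4 places."""
    counts = Counter(samples)
    total = len(samples)
    return {
        group: round(counts.get(group, 0) / total - share, 4)
        for group, share in reference_shares.items()
    }


def underrepresented(gaps, tolerance=0.02):
    """Groups whose dataset share falls short of the reference by more than `tolerance`."""
    return sorted(group for group, gap in gaps.items() if gap < -tolerance)


if __name__ == "__main__":
    # Hypothetical group labels for 100 training records and hypothetical reference shares.
    samples = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
    reference = {"A": 0.60, "B": 0.25, "C": 0.10, "D": 0.05}
    gaps = representation_gaps(samples, reference)
    print(gaps)
    print(underrepresented(gaps))
```

Here group A is overrepresented by 20 percentage points, while B, C, and D are flagged as underrepresented. Group D does not appear in the data at all, which is exactly the pattern exclusion bias describes.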

Real-World Examples of AI Bias Failures

While AI technologies promise efficiency and innovation, they've also revealed significant failures due to bias in real-world applications.

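In healthcare, a widely used algorithm favored white patients over equally sick Black patients because it used past healthcare spending as a proxy for medical need, reflecting historical disparities in access to care. Changing what the algorithm predicted reduced this bias by roughly 80%. This underscores the importance of choosing the right data and prediction target to mitigate bias in AI systems.

In hiring, Amazon's experimental recruiting algorithm, trained on a decade of résumés submitted mostly by men, learned to penalize female candidates, for example by downgrading résumés that included the word "women's". Amazon disbanded the project in 2017.

In the justice system, a ProPublica analysis of the COMPAS risk-assessment algorithm found that Black defendants who did not go on to reoffend were nearly twice as likely as white defendants to be labeled high risk, raising concerns about unfair bail and sentencing decisions.

In finance, the Apple Card was reported to offer women lower credit limits than men with similar financial profiles, prompting a regulatory investigation.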

Lastly, Google's image search for "CEO" showcased predominantly male results, reinforcing stereotypes.

These examples illustrate the urgent need for diverse and representative training data to prevent AI from perpetuating existing inequalities.
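Much of the criticism of COMPAS centered on unequal error rates: how often people who did not reoffend were nonetheless flagged as high risk (the false positive rate). The sketch below computes that rate per group. The function name and the records are made up for illustration; they are not the COMPAS data.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Per-group false positive rate: share of people who did NOT reoffend but were flagged.

    records: iterable of (group, flagged_high_risk, reoffended) tuples.
    """
    flagged = defaultdict(int)
    negatives = defaultdict(int)
    for group, flagged_high_risk, reoffended in records:
        if not reoffended:  # only actual negatives count toward the false positive rate
            negatives[group] += 1
            if flagged_high_risk:
                flagged[group] += 1
    return {group: flagged[group] / negatives[group] for group in negatives}


if __name__ == "__main__":
    # Synthetic records for two hypothetical groups, X and Y.
    records = (
        [("X", True, False)] * 4 + [("X", False, False)] * 6 + [("X", True, True)] * 5
        + [("Y", True, False)] * 2 + [("Y", False, False)] * 8 + [("Y", True, True)] * 5
    )
    print(false_positive_rates(records))
```

In this synthetic data, non-reoffenders in group X are wrongly flagged at twice the rate of those in group Y (0.4 versus 0.2), even though both groups contain the same number of correctly flagged reoffenders. Overall accuracy alone would not reveal this gap.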

Strategies for Mitigating AI Bias

Addressing the significant failures highlighted in real-world AI applications requires a proactive approach to mitigating bias.

Start by ensuring your datasets are diverse and representative of the population. Preprocessing techniques like normalization and anonymization can reduce some biases, although simply removing sensitive attributes is not enough when other features (such as ZIP code) act as proxies for them. Data augmentation can further improve the representation of underrepresented groups. Continuous monitoring of AI behavior is also crucial to identify and correct biases that emerge once a system is in use.
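Another well-known preprocessing option, often called reweighing, assigns each training example a weight so that group membership and outcome label look statistically independent: w(g, y) = P(g) · P(y) / P(g, y). This is a swapped-in technique distinct from normalization or anonymization, and the function names and data below are hypothetical; it is a sketch, not a drop-in library routine.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight per (group, label) pair so that group and label look independent.

    w(g, y) = P(g) * P(y) / P(g, y), with probabilities estimated from counts.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return {
        (g, y): (group_counts[g] * label_counts[y]) / (n * count)
        for (g, y), count in joint_counts.items()
    }


def weighted_positive_rate(groups, labels, weights, group):
    """Positive-label rate within `group` after applying the sample weights."""
    total = sum(weights[(g, y)] for g, y in zip(groups, labels) if g == group)
    positive = sum(weights[(g, y)] for g, y in zip(groups, labels) if g == group and y == 1)
    return positive / total


if __name__ == "__main__":
    # Hypothetical data: group A has a 60% positive rate, group B only 20%.
    groups = ["A"] * 50 + ["B"] * 50
    labels = [1] * 30 + [0] * 20 + [1] * 10 + [0] * 40
    weights = reweighing_weights(groups, labels)
    print(weights)
    print(weighted_positive_rate(groups, labels, weights, "A"),
          weighted_positive_rate(groups, labels, weights, "B"))
```

After weighting, both groups have a positive rate of about 0.4, the overall base rate. The per-example weights can then be passed to a learner that supports them; for instance, scikit-learn's `LogisticRegression.fit` accepts a `sample_weight` argument.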


When designing algorithms, incorporate fairness-aware constraints into training, and consider ensemble methods that blend multiple models, which can dilute the idiosyncratic biases of any single model (though not biases that all the models inherit from the same data). Diverse data remains essential for reflecting real-world scenarios and reducing the risk of biased AI outcomes.
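One common way to express a fairness-aware constraint is as a penalty added to the training loss. The sketch below assumes demographic parity (similar average scores across groups) is the fairness goal, which is only one of several competing definitions; the function names and the weighting parameter `lam` are hypothetical. It only computes the objective; in practice an optimizer would minimize it while training the model.

```python
import math


def log_loss(y_true, y_prob, eps=1e-12):
    """Average binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


def parity_gap(y_prob, groups):
    """Demographic parity gap: largest difference in mean predicted score between groups."""
    scores = {}
    for p, g in zip(y_prob, groups):
        scores.setdefault(g, []).append(p)
    means = [sum(v) / len(v) for v in scores.values()]
    return max(means) - min(means)


def fairness_aware_loss(y_true, y_prob, groups, lam=1.0):
    """Accuracy term plus lam * fairness penalty; a larger lam trades accuracy for parity."""
    return log_loss(y_true, y_prob) + lam * parity_gap(y_prob, groups)


if __name__ == "__main__":
    y_true = [1, 1, 0, 0]
    y_prob = [0.8, 0.6, 0.3, 0.1]
    groups = ["A", "A", "B", "B"]
    print(log_loss(y_true, y_prob), fairness_aware_loss(y_true, y_prob, groups, lam=2.0))
```

Raising `lam` makes the optimizer pay more for score gaps between groups; the right setting depends on how much accuracy the application can give up.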

Implement a human-in-the-loop system for oversight and conduct regular bias audits to evaluate AI outputs.
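A bias audit can start with something as simple as comparing selection rates across groups, and human-in-the-loop oversight can be as simple as routing borderline scores to a reviewer. The sketch below uses the "four-fifths" rule of thumb from US employment-selection guidelines (a group's selection rate below 80% of the highest group's rate is treated as a warning sign). The function names, data, and the 0.4 to 0.6 review band are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs. Returns the selection rate per group."""
    selected = defaultdict(int)
    total = defaultdict(int)
    for group, was_selected in decisions:
        total[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / total[g] for g in total}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    return min(rates.values()) / max(rates.values())


def route(score, low=0.4, high=0.6):
    """Send borderline model scores to a human reviewer instead of deciding automatically."""
    return "human_review" if low <= score <= high else "automated"


if __name__ == "__main__":
    decisions = ([("A", True)] * 30 + [("A", False)] * 20
                 + [("B", True)] * 18 + [("B", False)] * 32)
    rates = selection_rates(decisions)
    ratio = disparate_impact_ratio(rates)
    # Four-fifths rule of thumb: a ratio below 0.8 warrants investigation.
    print(rates, round(ratio, 2), "FLAG" if ratio < 0.8 else "ok")
    print(route(0.55), route(0.92))
```

Here group B is selected at 60% of group A's rate, so the audit flags it for investigation. A low ratio is a prompt for closer review, not proof of discrimination on its own.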

Foster transparency by documenting potential biases and providing clear explanations for AI decisions.
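A widely used format for this kind of documentation is the model card: a short, structured summary published alongside a model. The sketch below is a minimal, hypothetical structure; the class name, fields, and example values are illustrative assumptions rather than any standard schema.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCard:
    """Hypothetical, minimal model card for recording known biases and limitations."""

    model_name: str
    intended_use: str
    training_data: str
    evaluated_groups: list = field(default_factory=list)
    known_limitations: list = field(default_factory=list)

    def to_text(self) -> str:
        """Render the card as plain text for publishing alongside the model."""
        groups = ", ".join(self.evaluated_groups) or "none reported"
        lines = [
            f"Model: {self.model_name}",
            f"Intended use: {self.intended_use}",
            f"Training data: {self.training_data}",
            f"Evaluated groups: {groups}",
            "Known limitations:",
        ]
        if self.known_limitations:
            lines.extend(f"  - {item}" for item in self.known_limitations)
        else:
            lines.append("  - none reported")
        return "\n".join(lines)


if __name__ == "__main__":
    card = ModelCard(
        model_name="loan-screening-v2",  # hypothetical model
        intended_use="Rank applications for human review; not for automatic rejection.",
        training_data="2015-2022 applications from one region (hypothetical).",
        evaluated_groups=["age band", "gender"],
        known_limitations=["Lower recall for applicants under 25 in validation data."],
    )
    print(card.to_text())
```

Writing "none reported" explicitly, rather than leaving a field blank, makes gaps in the evaluation visible to readers of the card.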

Lastly, promote collaboration among diverse teams and educate stakeholders about AI biases, ensuring a more inclusive approach to AI development and deployment.

Challenges in Addressing AI Bias

As you dive into the complexities of AI bias, it becomes clear that tackling these challenges requires a multifaceted approach.

Data collection presents issues like historical inequities, sampling errors, and cultural influences that can skew results. Algorithmic design complicates matters further: the opacity of deep learning models can hide biases, and developers can unintentionally embed their own biases into algorithms. Recognizing these risks is essential for developing effective solutions, and continued research is improving our understanding of how biases manifest in AI systems.

Human interaction poses its own challenges, as implicit and cognitive biases from decision-makers can distort AI outputs.

Finally, societal and ethical implications, like amplifying social inequalities and regulatory compliance, add layers of complexity. Addressing these challenges demands commitment, diverse perspectives, and ongoing efforts to ensure fairness and transparency in AI systems.

The Importance of Continuous Monitoring

Continuous monitoring is crucial for ensuring that AI systems operate effectively and fairly, especially since biases can emerge unexpectedly after deployment. By analyzing real-time data, you can assess performance and establish key performance indicators (KPIs), including fairness metrics broken down by group, to guide improvements. Feedback from monitoring lets you refine algorithms and improve performance over time. This proactive approach helps detect issues like data drift early, before they affect operations. With effective monitoring practices, you'll experience fewer system failures and resolve problems up to 40% faster. You'll also build user trust by ensuring reliable performance while gaining better visibility into operations. Integrating monitoring with data governance further strengthens compliance, making your AI systems more robust and trustworthy.
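One common way to quantify data drift is the population stability index (PSI), which compares the distribution of a feature or score at training time with its distribution in live traffic. The sketch below assumes both histograms use the same bins; the function names are hypothetical, and the 0.1 and 0.25 thresholds are widely used rules of thumb rather than fixed standards.

```python
import math


def population_stability_index(expected_counts, actual_counts, eps=1e-6):
    """PSI between a baseline (e.g. training-time) histogram and a live histogram.

    Both arguments are counts over the same bins; eps guards against empty bins.
    """
    e_total = sum(expected_counts)
    a_total = sum(actual_counts)
    psi = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_share = max(e / e_total, eps)
        a_share = max(a / a_total, eps)
        psi += (a_share - e_share) * math.log(a_share / e_share)
    return psi


def drift_status(psi):
    """Rule-of-thumb bands; tune the thresholds for your own system."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate drift"
    return "significant drift"


if __name__ == "__main__":
    baseline = [50, 50]  # hypothetical score histogram at training time
    live = [90, 10]      # hypothetical histogram from this week's traffic
    psi = population_stability_index(baseline, live)
    print(round(psi, 3), drift_status(psi))
```

Running the same check separately for each demographic group can reveal drift that affects one group while the aggregate numbers still look stable.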

The Future of Fair and Transparent AI Systems

While the rapid evolution of AI technology presents exciting opportunities, it also raises essential questions about fairness and transparency in its application.

You'll find that explainable AI (XAI) enhances trust by providing clear insights into AI decisions. Interpretability is key, ensuring you understand how algorithms work internally. Additionally, algorithmic transparency ensures that users are aware of the logic behind AI systems, further enhancing comprehension.
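For a linear scoring model, a basic explanation can attribute a decision to individual features by comparing an instance with a baseline: each feature contributes its weight times its difference from the baseline. Tools such as SHAP and LIME generalize this idea to more complex models. The function name, feature names, weights, and values below are hypothetical.

```python
def explain_linear(weights, baseline, instance):
    """Per-feature contributions of a linear score relative to a baseline instance.

    contribution_i = weight_i * (instance_i - baseline_i). The contributions sum to
    score(instance) - score(baseline). Sorted by absolute size, largest first.
    """
    contributions = {
        name: w * (instance[name] - baseline[name]) for name, w in weights.items()
    }
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)


if __name__ == "__main__":
    weights = {"income": 0.5, "debt": -0.8, "zip_code_risk": 1.2}
    baseline = {"income": 2.0, "debt": 1.0, "zip_code_risk": 0.0}  # e.g. an average applicant
    applicant = {"income": 3.0, "debt": 2.0, "zip_code_risk": 1.0}
    for name, contribution in explain_linear(weights, baseline, applicant):
        print(f"{name:>14}: {contribution:+.2f}")
```

In this example the largest contribution comes from `zip_code_risk`, a feature that can act as a proxy for race. Surfacing that kind of pattern is how transparency helps identify bias.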

Moreover, transparent systems help identify and mitigate biases, vital for ethical AI deployment. As regulatory frameworks evolve, focusing on transparency will likely reduce legal risks and foster accountability.

Collaborating on open-source models can further enhance trust. By prioritizing these values, AI can unlock its full potential, driving ethical innovation and leading to fairer societal outcomes.

Embracing this future is essential for building consumer confidence in AI technology.

Frequently Asked Questions

How Can Individuals Identify Biased AI Systems in Everyday Use?

To identify biased AI systems in everyday use, you can start by examining the outcomes they produce.

If you notice consistent inaccuracies or unfair treatment towards certain groups, that's a red flag.

Research the algorithms behind the tools you use; find out if they're based on diverse data.

Also, pay attention to user reviews and reports about bias.

Engaging in discussions about AI ethics can help raise awareness and promote accountability.

What Role Do Engineers Play in Preventing AI Bias?

Engineers play a crucial role in preventing AI bias by designing algorithms that prioritize fairness and inclusivity.

You'll find them collaborating with data scientists and ethicists to identify potential biases and implement strategies like data diversification and algorithm retraining.

By actively participating in audits and adhering to ethical guidelines, they ensure AI systems are continuously validated.

Their commitment to transparency and accountability helps you trust the technology that impacts your daily life.

Are There Legal Repercussions for Biased AI Decisions?

Imagine a ship sailing smoothly until it hits a hidden reef—this is how biased AI can unexpectedly steer companies into dangerous waters.

Yes, there are legal repercussions for biased AI decisions. You could face hefty fines, reputational damage, and legal disputes if your AI systems violate anti-discrimination laws or privacy regulations.

As a result, it's crucial to navigate these regulations wisely to avoid sinking your business in turbulent legal seas.

How Can Consumers Advocate for Fair AI Practices?

You can advocate for fair AI practices by getting involved with consumer advocacy organizations.

Share your experiences and concerns about AI systems to help shape policies. Engage in community discussions and support initiatives that promote transparency and accountability.

Collaborate with others to push for regulatory measures that protect consumers.

What Future Technologies May Help Reduce AI Bias?

AI models trained on biased data tend to reproduce that bias in their outputs, so new tools aim to catch and correct it earlier.

To reduce AI bias, future technologies like advanced machine learning frameworks and real-time monitoring systems will play a crucial role.

You'll see enhanced algorithms that prioritize fairness through counterfactual reasoning and data re-weighting.
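A simple form of counterfactual reasoning is a "flip test": change only a protected attribute and check whether the decision changes. The sketch below is hypothetical throughout (function and model names, features, and thresholds). Note that it is weaker than full counterfactual fairness, which requires a causal model, because swapping one field leaves untouched any features that depend on it.

```python
def counterfactual_flip_rate(model, records, attribute, swap):
    """Fraction of records whose decision changes when only `attribute` is swapped.

    swap: dict mapping each attribute value to its counterfactual value.
    """
    changed = 0
    for record in records:
        counterfactual = dict(record)  # shallow copy; all other features stay fixed
        counterfactual[attribute] = swap[record[attribute]]
        if model(counterfactual) != model(record):
            changed += 1
    return changed / len(records)


def biased_model(r):
    # Hypothetical model that (wrongly) adds a score bonus based on gender.
    return r["income"] / 100 + (0.2 if r["gender"] == "M" else 0.0) >= 0.6


def income_only_model(r):
    # Hypothetical model that ignores gender entirely.
    return r["income"] / 100 >= 0.6


if __name__ == "__main__":
    records = [
        {"income": 50, "gender": "M"},
        {"income": 45, "gender": "F"},
        {"income": 90, "gender": "F"},
        {"income": 20, "gender": "M"},
    ]
    swap = {"M": "F", "F": "M"}
    print(counterfactual_flip_rate(biased_model, records, "gender", swap))
    print(counterfactual_flip_rate(income_only_model, records, "gender", swap))
```

Half of the decisions made by the biased model flip when only gender changes, while the income-only model's decisions never do.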

Additionally, collaborative open-source platforms will enable you and others to contribute diverse datasets, fostering transparency and inclusivity in AI development.

Conclusion

In the ever-evolving landscape of AI, recognizing and addressing bias is crucial to ensure fairness and transparency. If we don't take the bull by the horns, we risk perpetuating injustice and inequality. By implementing proactive strategies and continuously monitoring algorithms, we can pave the way for a future where technology truly serves everyone. Let's work together to build systems that reflect our shared values and create a more equitable world for all.