As public sector organizations increasingly rely on artificial intelligence (AI) to streamline operations and enhance decision-making, the risk of data corruption looms large. You might not realize it, but the integrity of the data fed into AI systems is critical. Poor-quality data can produce biased or inaccurate outputs, which in turn can seriously undermine important decisions within government operations.

When AI models are trained on corrupted data, they may reinforce existing social or political biases, skewing outcomes in ways that have real-world consequences. The opacity of complex AI systems makes accountability harder still: it’s often difficult to trace a bias or a data corruption problem back to its source, leaving organizations exposed to mistakes that can erode public trust. The regulatory frameworks now being developed in various countries are therefore essential to mitigating these risks.

You may find it alarming how hard it is to develop robust regulatory frameworks that ensure data integrity when AI is evolving so rapidly. This challenge underscores the importance of human oversight: regular checks are essential to catch and correct data corruption before it affects decision-making.
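As a rough illustration of what such routine checks might look like, the sketch below screens a batch of records before they reach a model and returns the problems it finds so a person can review them. The record fields (`citizen_id`, `age`, `benefit_amount`) and the validation rules are hypothetical, chosen only for illustration; real pipelines would use domain-specific rules and dedicated validation tooling.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Record:
    # Hypothetical fields for illustration, not a real government schema.
    citizen_id: str
    age: Optional[int]
    benefit_amount: Optional[float]


def find_problems(records):
    """Return (index, reason) pairs for records that fail basic integrity checks."""
    problems = []
    seen_ids = set()
    for i, r in enumerate(records):
        # Identity checks: every record needs a unique, non-empty ID.
        if not r.citizen_id:
            problems.append((i, "missing id"))
        elif r.citizen_id in seen_ids:
            problems.append((i, "duplicate id"))
        else:
            seen_ids.add(r.citizen_id)
        # Range checks: illustrative plausibility bounds.
        if r.age is None or not 0 <= r.age <= 120:
            problems.append((i, "age missing or out of range"))
        if r.benefit_amount is None or r.benefit_amount < 0:
            problems.append((i, "benefit amount missing or negative"))
    return problems


batch = [
    Record("A1", 34, 120.0),
    Record("A1", 34, 120.0),   # duplicated row
    Record("B2", 430, -5.0),   # corrupted values
]
for idx, reason in find_problems(batch):
    print(idx, reason)
```

Returning a list of problems, rather than silently dropping bad rows, keeps a human reviewer in the loop to decide whether a record is corrupted or just unusual.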

While AI applications can vastly improve efficiency in tasks like document processing and customer service, the risks involved can’t be overlooked. For instance, in sectors like healthcare and education, AI can provide personalized solutions, but if the underlying data is tainted, the results can be misleading or harmful.

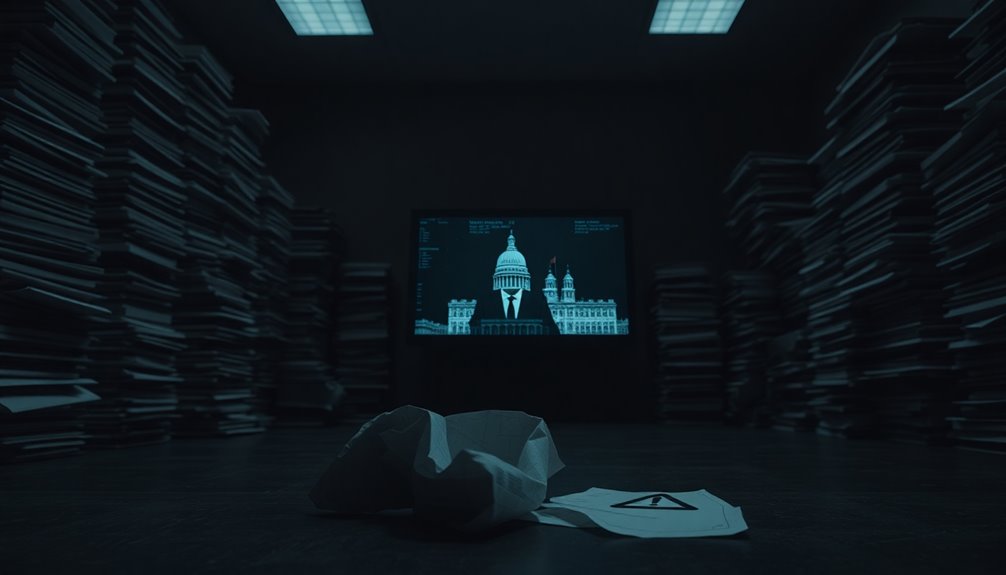

The urban development and defense sectors also stand to benefit from AI, yet the potential for misuse remains high. Cybersecurity threats, propaganda, and surveillance are just a few areas where AI can be weaponized, raising significant ethical questions.

To combat these risks, AI can itself be put to work in anti-corruption efforts. It can analyze vast datasets to identify corruption patterns and flag suspicious activities, making it a powerful ally in promoting transparency. Tools like Brazil’s “Alice” monitor public procurement processes, helping to expose corruption before it becomes entrenched.
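To make “flag suspicious activities” more concrete, here is a deliberately simple sketch. It is not how Alice or any real procurement-monitoring system works; it just illustrates one common statistical idea. Contracts are grouped by item category, and any whose unit price deviates strongly from the category median is flagged using the modified z-score, 0.6745 × (price − median) / MAD, where MAD is the median absolute deviation. The contract fields and the 3.5 threshold are illustrative assumptions.

```python
from collections import defaultdict
from statistics import median


def flag_outlier_contracts(contracts, threshold=3.5):
    """Flag contracts whose unit price is an outlier within its item category.

    contracts: list of dicts with hypothetical keys 'id', 'category', 'unit_price'.
    Returns (id, category, score) tuples, where score is the modified z-score.
    """
    by_category = defaultdict(list)
    for c in contracts:
        by_category[c["category"]].append(c)

    flagged = []
    for category, items in by_category.items():
        prices = [c["unit_price"] for c in items]
        med = median(prices)
        mad = median([abs(p - med) for p in prices])
        if mad == 0:
            # Most prices are identical, so this score is undefined; skip the category.
            continue
        for c in items:
            score = 0.6745 * (c["unit_price"] - med) / mad
            if abs(score) > threshold:
                flagged.append((c["id"], category, round(score, 1)))
    return flagged


contracts = [
    {"id": "C1", "category": "laptop", "unit_price": 1000},
    {"id": "C2", "category": "laptop", "unit_price": 1050},
    {"id": "C3", "category": "laptop", "unit_price": 980},
    {"id": "C4", "category": "laptop", "unit_price": 1020},
    {"id": "C5", "category": "laptop", "unit_price": 990},
    {"id": "C6", "category": "laptop", "unit_price": 4000},
    {"id": "C7", "category": "paper", "unit_price": 5.0},
    {"id": "C8", "category": "paper", "unit_price": 5.2},
    {"id": "C9", "category": "paper", "unit_price": 4.9},
    {"id": "C10", "category": "paper", "unit_price": 5.1},
]
print(flag_outlier_contracts(contracts))  # [('C6', 'laptop', 80.7)]
```

The median and MAD are used instead of the mean and standard deviation because a single inflated contract would otherwise drag those statistics toward itself and mask its own anomaly. A flag is only a prompt for a human auditor to take a closer look, not proof of wrongdoing, and categories where most prices are identical (MAD of zero) would need a different rule.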

However, implementing such solutions isn’t without its challenges. You’ll need to consider technical barriers and ethical concerns that arise when developing AI models. As you engage with AI in the public sector, remember that the potential for misuse is real.

Balancing efficiency with ethical considerations and data integrity becomes your responsibility. The effectiveness of AI hinges on the quality of data and the vigilance exercised in monitoring its use.