Autonomous weapons powered by AI are transforming warfare by enabling faster decisions and more precise attacks, but they also raise serious ethical concerns. You might worry about machines lacking moral judgment, risking civilian lives, and reducing human oversight. International regulations are still evolving, and questions of accountability remain unclear. If you’re curious about how these issues shape future conflicts and global policy, exploring further can provide a deeper understanding of this complex debate.

Key Takeaways

- Autonomous weapons enhance warfare efficiency but raise ethical concerns about morality and accountability in life-and-death decisions.

- International regulations are needed to ensure AI military systems comply with humanitarian laws and prevent reckless use.

- The lack of human judgment in autonomous systems risks civilian casualties and dehumanizes warfare.

- Clear accountability frameworks are essential for responsibility in case of war crimes committed by autonomous weapons.

- Establishing ethical red lines and international treaties can help manage the risks of AI in warfare and prevent escalation.

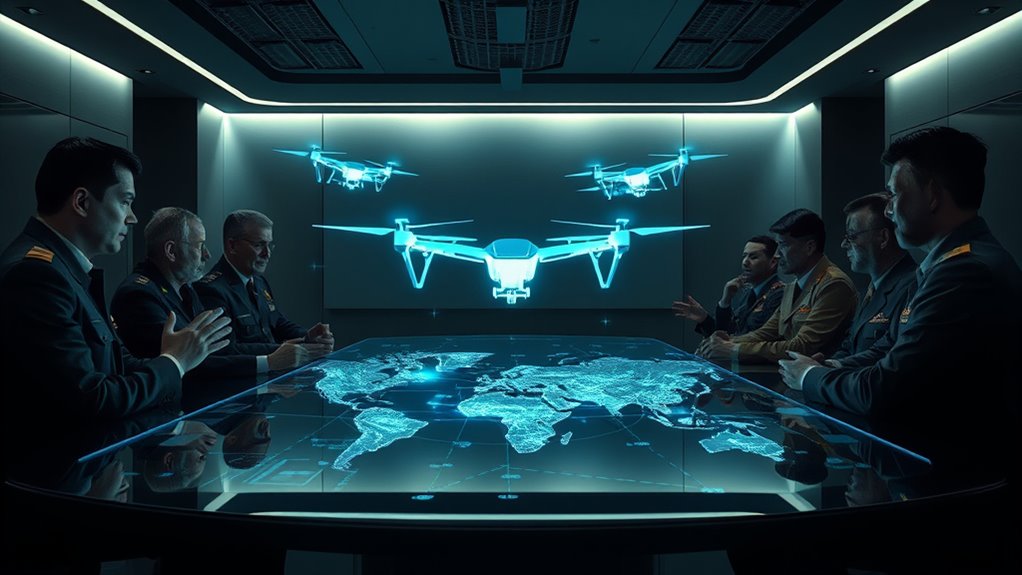

Artificial intelligence is transforming modern warfare by enabling faster decision-making, improving targeting accuracy, and automating complex tasks. As these technologies advance rapidly, they raise critical questions about the moral implications of deploying autonomous weapons. You might wonder whether machines can truly understand the nuances of human life and whether it’s ethical to let algorithms decide when to take a life. The debate centers on whether AI-driven systems can make morally sound decisions or if their actions are inherently detached from human values. Critics argue that removing humans from the decision loop risks dehumanizing warfare, potentially leading to unintended civilian casualties or violations of international regulations designed to protect non-combatants. The challenge lies in establishing clear international standards that govern the development and use of autonomous weapons, ensuring they align with humanitarian laws. Without such regulations, there’s a danger that autonomous systems could be used recklessly or fall into the wrong hands, escalating conflicts or causing unforeseen harm.

You also need to consider the pace at which AI technology evolves. While some argue that autonomous weapons could reduce human error and minimize casualties by executing precision strikes, others warn that the lack of moral judgment in machines introduces new risks. International regulations are essential here; they can set boundaries on what autonomous capabilities are acceptable and prevent an arms race fueled by unchecked technological advancements. Many experts advocate for treaties or agreements that ban or limit fully autonomous lethal systems, emphasizing that human oversight must remain central in life-and-death decisions. Such regulations would serve as a moral compass, guiding nations to develop AI in ways that respect international humanitarian law and uphold ethical standards.

You’re also faced with the reality that autonomous weapons could shift the nature of warfare itself. As machines take on more complex roles, questions about accountability become even more pressing. If an autonomous drone commits a war crime, who is responsible—the programmer, the commander, or the manufacturer? International regulations could help clarify these responsibilities, but they require global cooperation and consensus. Without a unified framework, different countries might pursue autonomous systems with varying ethical standards, increasing the risk of misuse or escalation. Ultimately, the moral implications and the need for robust international regulations are intertwined, shaping how society manages the integration of AI into warfare. It’s your responsibility to stay informed and advocate for policies that prioritize human dignity, ethical considerations, and global stability as AI continues to redefine the battlefield.

Frequently Asked Questions

How Can International Law Regulate Autonomous Weapons Effectively?

International law can regulate autonomous weapons effectively by strengthening treaty enforcement and developing clear legal frameworks. These frameworks should define accountability, responsible use, and ethical boundaries. States also need to promote international cooperation, monitor compliance, and impose penalties for violations. Establishing universal standards and holding nations accountable helps prevent misuse and keeps the use of autonomous weapons within the bounds of international law.

What Are the Potential Risks of AI Escalation in Conflicts?

Imagine an AI arms race in which conflicts spiral out of control faster than humans can respond, with autonomous weapons reacting unpredictably. The main risks include unintended escalation, miscalculations, and loss of human control. Such escalation could lead to widespread violence, making conflicts deadlier and harder to contain, threatening global stability and increasing the chances of catastrophic misunderstandings.

Can Autonomous Weapons Distinguish Between Combatants and Civilians Reliably?

You might wonder if autonomous weapons can reliably distinguish between civilians and combatants. Currently, these systems often struggle to tell the two apart in complex environments and ambiguous situations. They rely on algorithms that may misinterpret context, risking harm to innocent people. While advancements are ongoing, ensuring accurate differentiation remains a significant challenge, highlighting the need for strict oversight and ethical safeguards in deploying such technologies.

How Do Different Countries View the Ethical Implications of AI in Warfare?

Countries view AI in warfare through different cultural and strategic lenses. Some prioritize ethical considerations like minimizing civilian harm, while others focus on technological advantages. These priorities vary greatly, and they shape both national policies and international debates. The differences can lead to disagreements on deploying autonomous weapons, which is why respecting cultural contexts matters while working toward global ethical standards for warfare technology.

What Role Do Private Companies Play in Developing Autonomous Military Systems?

Private companies play a significant role in developing autonomous military systems, both through technological innovation and through their influence on procurement and policy. They often push boundaries to create advanced AI-powered weapons, driven by profit and competitive advantage. While this accelerates progress in military technology, it raises concerns about ethical oversight and accountability. That impact points to the need for regulation that keeps these innovations aligned with international ethical standards and prevents misuse.

Conclusion

As you navigate the complex landscape of AI and warfare, remember the importance of ethical boundaries, the need for clear regulations, and your role in shaping responsible innovation. You can champion transparency, demand accountability, and promote human oversight. When you prioritize ethics over expediency and value human life over autonomous decision-making, you help ensure that technology serves peace, not destruction. In this balance, your voice and actions truly make a difference.